What is the value of consumer-generated content (CGC)? How do shoppers behave on different devices? These are the types of questions the Analytics team strives to answer by doing what we do best – digging through troves of data. Lately, we’ve been thinking about the relationship between two of the most basic metrics: average rating and the number of reviews a product has. Let’s drill down a little deeper and demonstrate how to use this available data to gain a more complete perspective on consumer sentiment.

The Stabilization Threshold

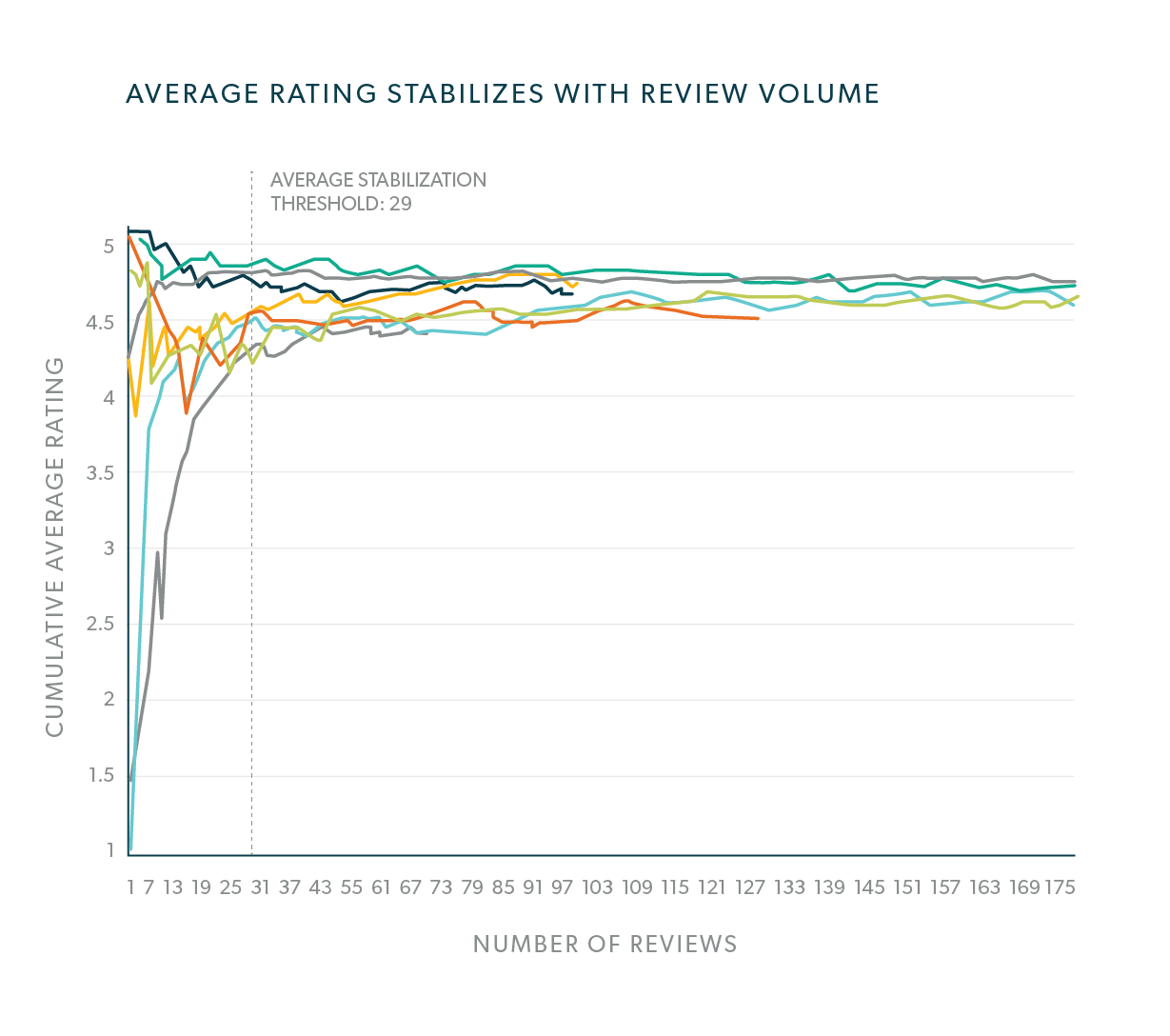

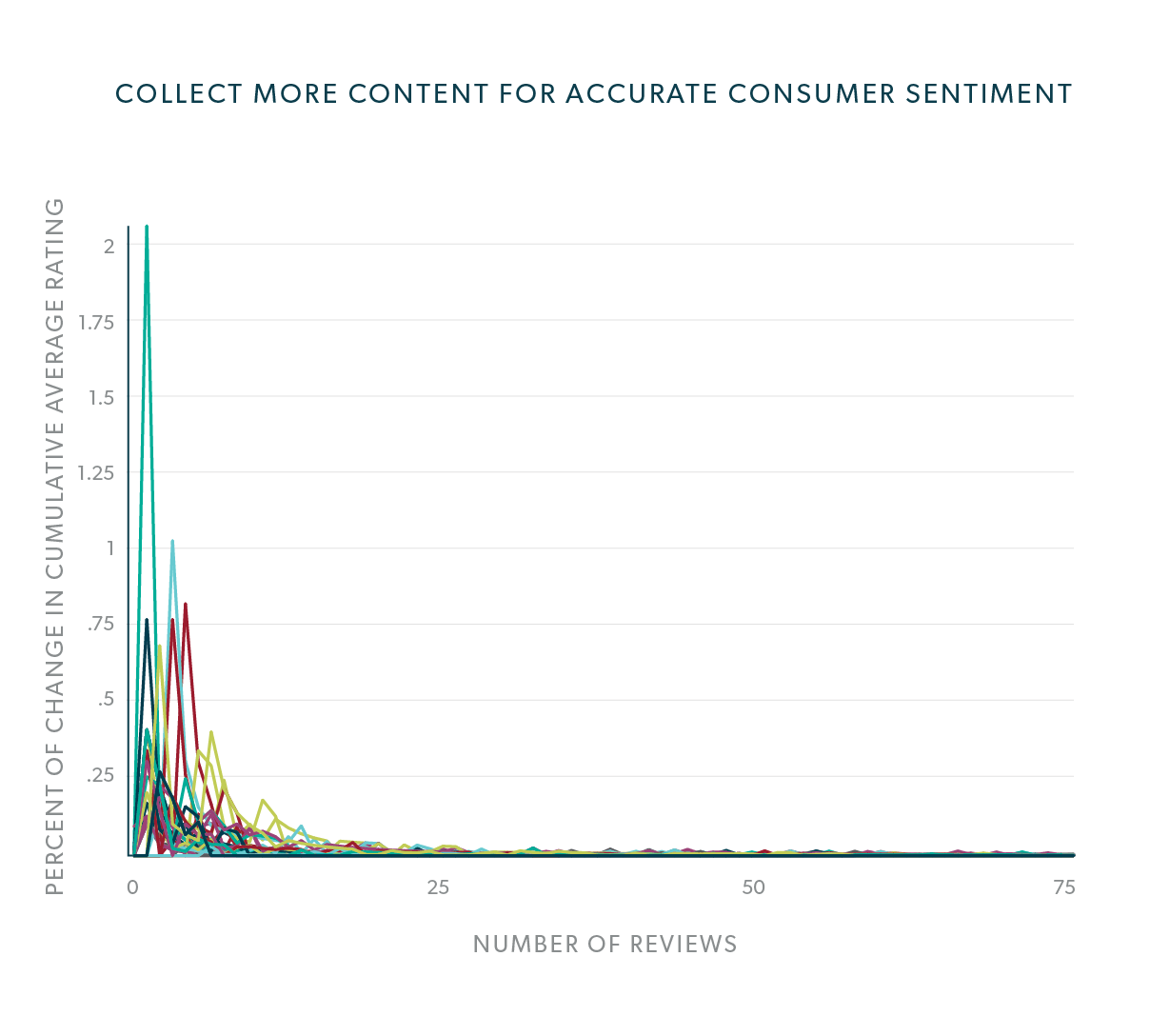

Average rating is volatile early on, but stabilizes with more review volume. Our data shows that when a product begins to collect its first few reviews, the product’s average rating is highly volatile. After obtaining a certain number of reviews, it then takes significantly more reviews to change the average rating by a noticeable amount. We call this level the stabilization threshold.

As a product collects more and more reviews, the rate of change, or volatility, of its average rating decreases sharply, because each new review is averaged together with every review that came before it. This volatility eventually contracts to a point where each new review has little effect on the product’s average rating. At this point, the average rating has stabilized.
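The arithmetic behind this is simple: if a product has n reviews with an average of a, a new rating r moves the average by (r - a) / (n + 1), so on a 1-to-5 star scale no single review can move it by more than 4 / (n + 1). Here is a minimal Python sketch of how one might locate a stabilization threshold from a list of ratings. The function names, the 0.05-star default tolerance, and the "no later review moves the average by more than the tolerance" rule are assumptions made for illustration, not the exact definition used in our analysis.

```python
from itertools import accumulate


def cumulative_averages(ratings):
    """Return the running average rating after each review."""
    totals = accumulate(ratings)
    return [total / count for count, total in enumerate(totals, start=1)]


def stabilization_threshold(ratings, tolerance=0.05):
    """Return the smallest review count after which no single later review
    moves the cumulative average by more than `tolerance` stars.

    Returns None if the most recent review still moved the average by more
    than `tolerance`, or if there are fewer than two reviews.
    The rule and the default tolerance are illustrative assumptions.
    """
    averages = cumulative_averages(ratings)
    threshold = None
    # Walk backwards from the newest review until we hit a "big" move.
    for n in range(len(averages) - 1, 0, -1):
        change = abs(averages[n] - averages[n - 1])
        if change > tolerance:
            break
        threshold = n  # the average was already stable after n reviews
    return threshold


if __name__ == "__main__":
    # Made-up ratings: one early 1-star review followed by 5-star reviews.
    sample = [1, 5, 5, 5, 5, 5, 5, 5, 5, 5]
    print(cumulative_averages(sample))
    print(stabilization_threshold(sample, tolerance=0.1))
```

A tighter tolerance pushes the threshold out to a higher review count, so the value you choose should reflect how small a change you consider "noticeable."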

Understanding whether the average rating has stabilized is important because it helps you determine whether you’ve collected enough feedback to make informed decisions. For example, if you launch a brand-new product and its first 20 reviews yield an average rating of 2.0 stars, don’t worry just yet. You may not have collected enough content to know how customers at large really feel about the product. Conversely, if your product is rated 2.0 stars after stabilizing with 100 reviews, it might be time to pull the product off the shelf and send the insights gleaned from the review text back to your R&D department for product improvements. There’s no shame in conceding – consumers love when you take their feedback into consideration to fix your product or bring back an older (but better) version. Learn more about how QVC uses CGC to uncover product flaws here.

If your product’s average rating has stabilized at 4.5 stars: Congratulations, you’ve launched a great product! And now you know it’s great, because the data supports it. But even if you’ve reached stabilization, don’t rest on your laurels. A previous study shows that higher review volume is associated with an increase in the number of orders. Additionally, since volume is more important than average rating when it comes to influencing shoppers (even on the category page), you have even more reason to continue boosting review volume.

Maintain a perpetually current perspective

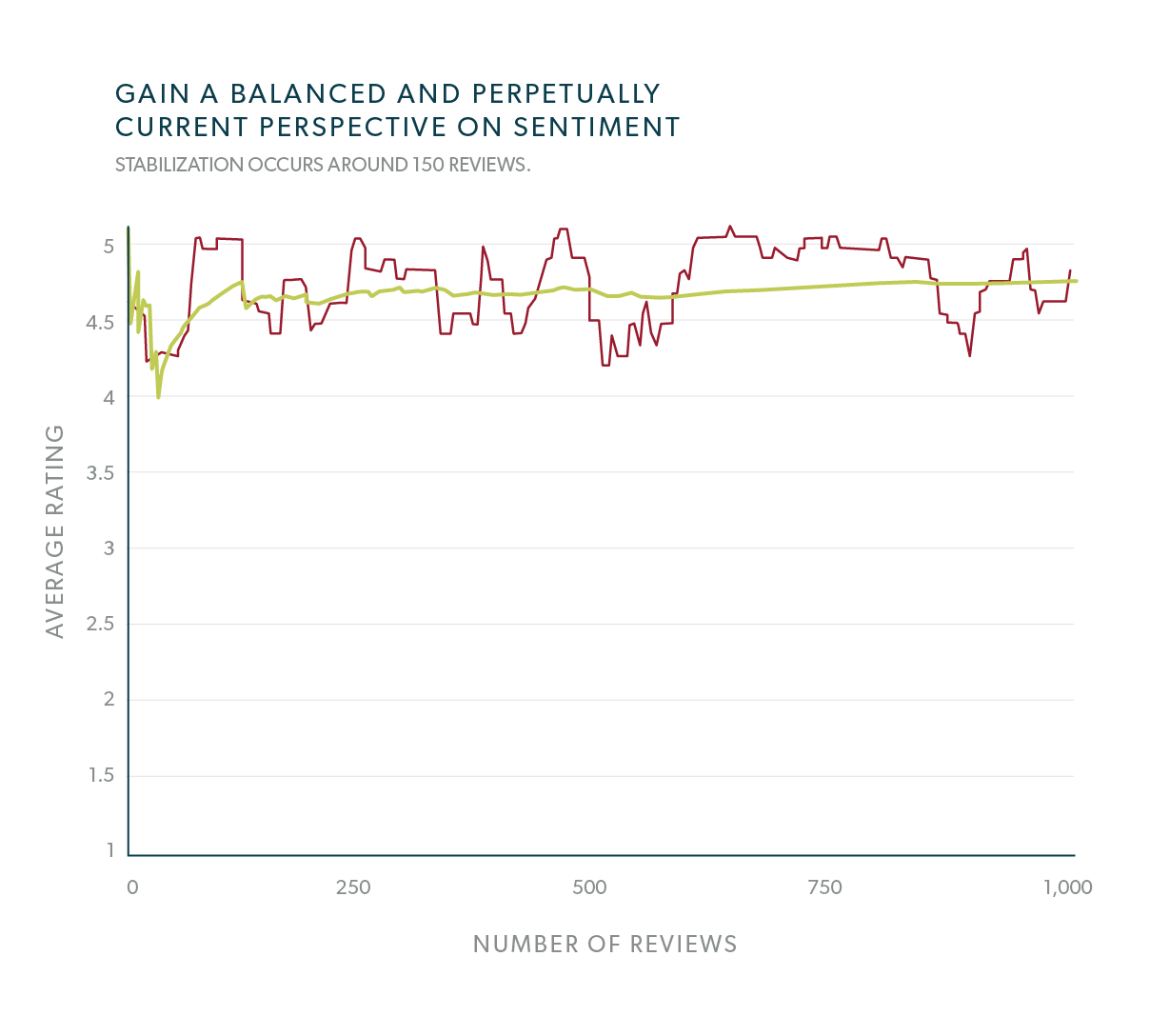

Long after the cumulative average rating has stabilized, customers continue to submit a wide variety of ratings. This diverse feedback can be used to gain both a balanced and perpetually current perspective on your customers’ sentiment. For example, when trending this product’s 20-rating moving average — the average of the previous 20 ratings — we see a healthy distribution of 3-, 4-, and 5-star reviews continuing to flow in. Customer sentiment oscillates over time, but the 4.7 average rating is protected from the short-term swings, having stabilized after 150 reviews.
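To illustrate the difference between the two views, here is a small Python sketch that computes a moving average over the most recent ratings and compares it with the cumulative average. The function name `moving_average` and the sample ratings are made up for illustration; only the 20-rating window comes from the chart described above.

```python
from collections import deque


def moving_average(ratings, window=20):
    """Average of the most recent `window` ratings.

    One value is reported per review once the window is full, so the
    result has len(ratings) - window + 1 entries (or none if too short).
    """
    recent = deque(maxlen=window)  # old ratings drop off automatically
    result = []
    for rating in ratings:
        recent.append(rating)
        if len(recent) == window:
            result.append(sum(recent) / window)
    return result


if __name__ == "__main__":
    # Hypothetical product: 150 five-star ratings, then 20 three-star ratings.
    ratings = [5] * 150 + [3] * 20
    cumulative = sum(ratings) / len(ratings)
    latest_window = moving_average(ratings, window=20)[-1]
    print(f"cumulative average:       {cumulative:.2f}")
    print(f"20-rating moving average: {latest_window:.2f}")
```

In this made-up case the moving average falls all the way to 3.0 while the cumulative average barely moves, which is exactly why the two numbers are worth tracking side by side.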

It is also important to keep a watchful eye throughout the lifecycle of the product. Depending on review volume, an ill-received, mid-cycle change could bring on a spell of negative reviews sizeable enough to drag down the average rating more noticeably. If you’re actively detecting negative feedback, you’ll be quicker to right the ship by making product enhancements or improving your content collection strategy early.
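One simple way to keep that watchful eye is to flag the moments when recent ratings fall well below the product's all-time average. The Python sketch below is only an illustration: the function name `flag_negative_spells`, the 20-rating window, and the half-star drop are assumptions you would tune to your own review volume.

```python
def flag_negative_spells(ratings, window=20, drop=0.5):
    """Return the review counts at which the average of the last `window`
    ratings sits more than `drop` stars below the all-time average.

    `window` and `drop` are illustrative defaults, not recommended values.
    """
    flagged = []
    total = 0
    for count, rating in enumerate(ratings, start=1):
        total += rating
        if count < window:
            continue  # not enough ratings yet to fill the window
        recent_avg = sum(ratings[count - window:count]) / window
        overall_avg = total / count
        if overall_avg - recent_avg > drop:
            flagged.append(count)
    return flagged


if __name__ == "__main__":
    # Hypothetical history: 30 five-star ratings, then five 1-star ratings
    # following an ill-received mid-cycle change.
    history = [5] * 30 + [1] * 5
    print(flag_negative_spells(history, window=5, drop=1.0))
```

A shorter window reacts faster but also raises more false alarms, so products with low review volume may need a smaller window and a larger drop.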

Not all products are created equal

Curious to discover how the nature of rating stabilization varies by industry, we replicated this analysis on a few different product categories, using a sample of products from a client in each.

The initial data revealed that the stabilization threshold, the time it takes to achieve stabilization, and the number of products that actually achieved stabilization all seem to vary by product category.

The consumer electronics products we observed took more reviews to stabilize than the sporting goods or apparel products – 77 reviews compared to 25 and 27, respectively. However, the consumer electronics products reached stabilization in less time than the products in other categories. This could be due to effective content collection strategies, or perhaps the expressive nature of the reviewers in this category.

Conversely, the broad selection and frequent catalogue turnover of apparel products mean it could take as long as three years to achieve average rating stabilization. Since this is not ideal, apparel retailers and brands should strive to collect more content, and more quickly, so that they can tell whether a new garment is a winner or a flop – and then make improvements sooner.

Do average ratings increase with more reviews?

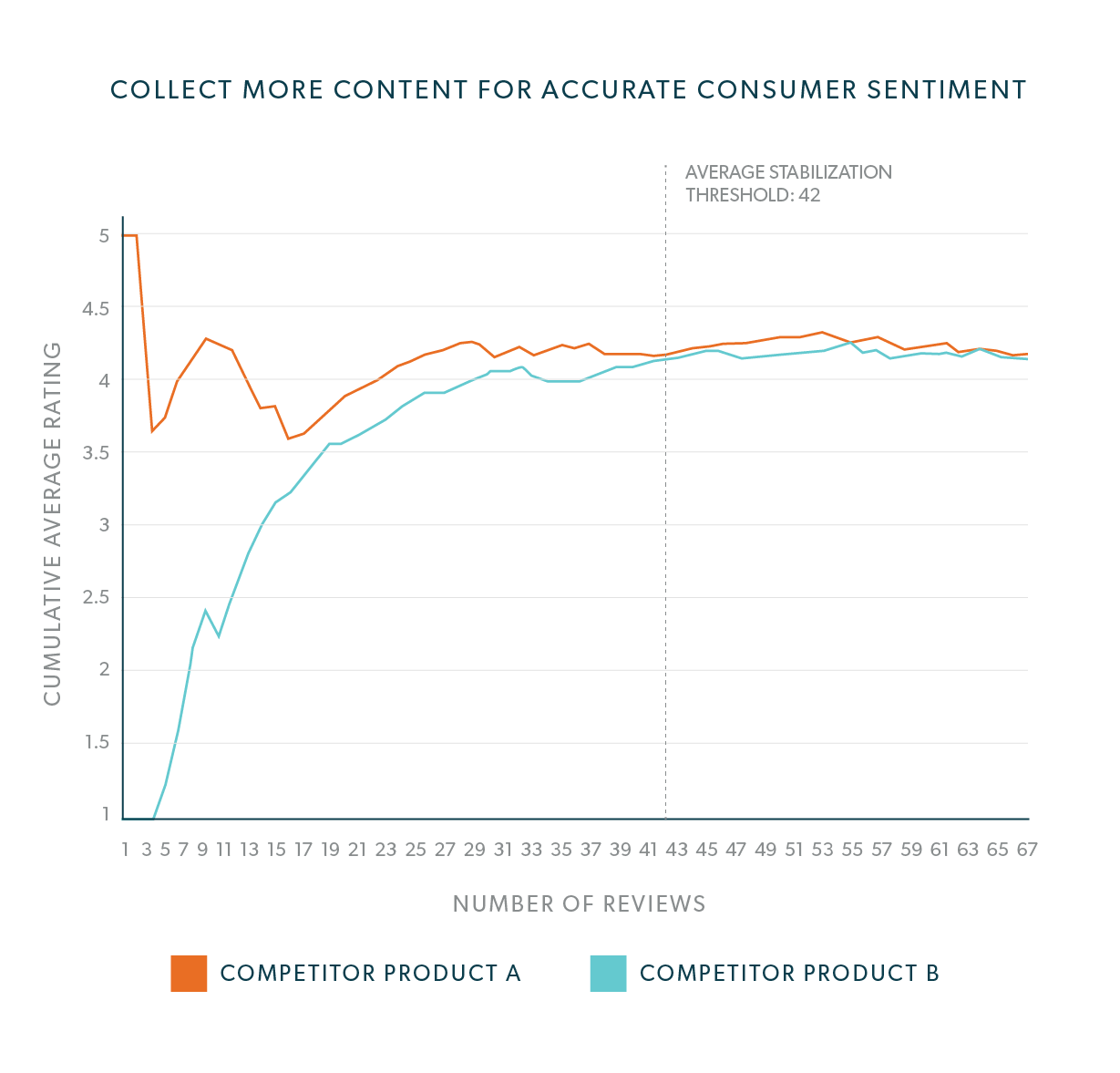

Getting more reviews can lead to better average ratings, but not always. Because average rating is so volatile early on, whether it stabilizes higher or lower than where it started really depends on the first few ratings that were submitted.

For example, this sample depicts how a product can either start with a strong average rating right out of the gate and then stabilize at a lower level, or instead, start with a low average rating and then stabilize at a higher level.

Getting just a handful of reviews isn’t enough to call it quits.

If these competitor brands had stopped collecting after just 10 reviews, each would have wrongly presumed that its own product was the clear winner (or loser). Collecting more content, enough to achieve a stabilized average rating, revealed that true consumer sentiment was much closer than the early ratings suggested. Now, with roughly equal ratings, the competitors are racing to out-collect each other on volume, so that each can more effectively influence customers on the category page, where their competing products may be listed side by side. Plus, customers like more recent reviews, and recent reviews help SEO too, since Google detects fresh, dynamic content when it indexes your product pages.
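To make the point concrete, here is a short Python sketch that compares two products after 10 reviews and again after 100. The ratings are entirely made up to mimic the pattern described above (they are not the data behind the chart), and the helper name `average_after` is hypothetical.

```python
def average_after(ratings, n):
    """Average of the first `n` ratings."""
    return sum(ratings[:n]) / n


# Made-up ratings for illustration only.
# Product A starts strong, then settles lower.
product_a = [5, 5, 5, 5, 5, 5, 5, 5, 4, 5] + [4, 4, 3, 5, 4] * 18
# Product B starts weak, then settles higher.
product_b = [2, 3, 1, 3, 2, 3, 2, 4, 3, 2] + [4, 5, 4, 5, 4] * 18

if __name__ == "__main__":
    for name, ratings in (("A", product_a), ("B", product_b)):
        early = average_after(ratings, 10)
        settled = average_after(ratings, 100)
        print(f"Product {name}: {early:.2f} after 10 reviews, "
              f"{settled:.2f} after 100 reviews")
```

After 10 reviews the two hypothetical products sit 2.4 stars apart (4.9 versus 2.5); after 100 reviews the gap has shrunk to about a tenth of a star, and the early "loser" is slightly ahead.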

Getting more reviews can be a good thing for average rating, especially for products like Competitor Product B in the above chart, but how you get more is the “X-factor” when it comes to getting better ratings. Content collection strategies like post-purchase e-mail (PPE) tend to bring in more positive reviews because they nudge satisfied, yet complacent, customers to actually write a review. If you don’t proactively ask for content, you’re left waiting on reviewers to come to your site on their own time. And because anger is a compelling emotion, a dissatisfied customer may be more likely to write a review unprompted than one who is content (or even elated) with the product or service.

Takeaways:

- Average rating is highly volatile early on, but tends to stabilize after more reviews are collected.

- Monitor your average ratings, but also consider how many reviews the product has received. You may not have enough content to give you a meaningful perspective on consumer sentiment.

- Don’t stop collecting content on a winning product — even if the rating has stabilized.

- Employ effective volume-driving strategies early so you don’t have to play catch-up with your average ratings later on.

To learn more about the impact of CGC on shopper behavior, explore the insights in the Bazaarvoice CGC Index.

This research was conducted by Andrew Minard, Lead Data Analyst, and Miles Carrington, Data Analyst, of the Bazaarvoice Social Analytics department.